Abstract

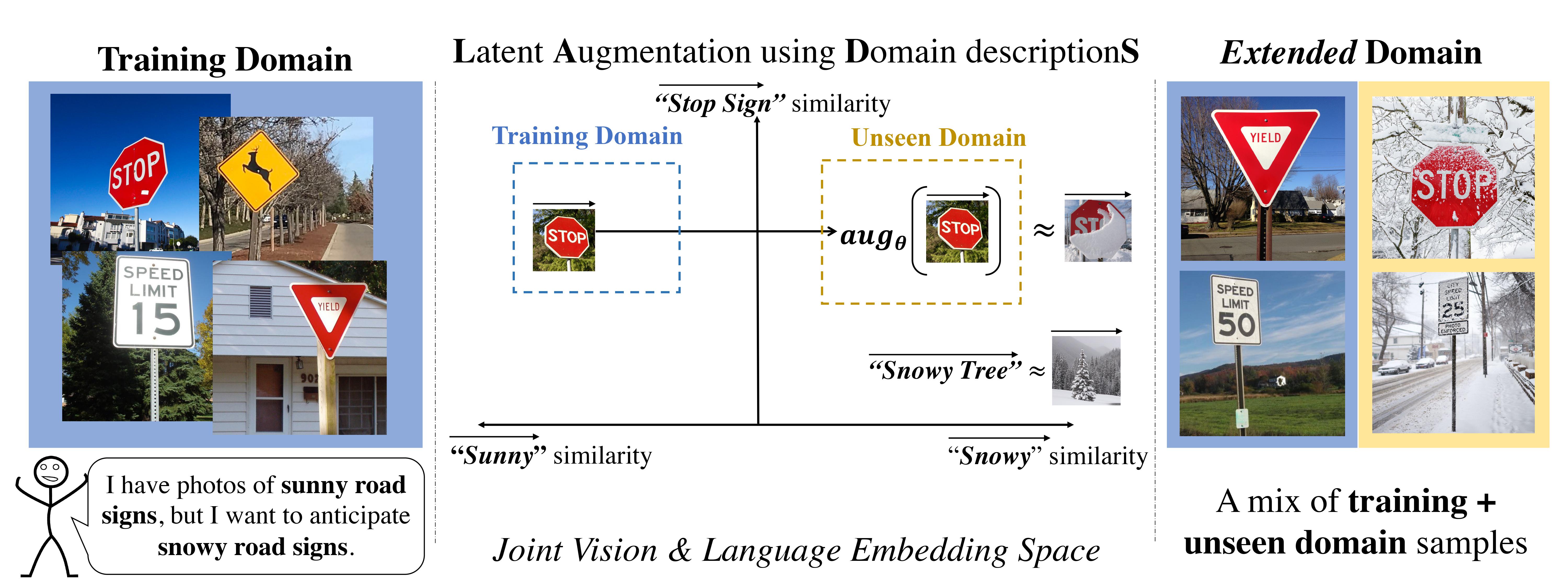

It is expensive to collect training data for every possible domain that a vision model may encounter when deployed. We instead consider how simply verbalizing the training domain (e.g. “photos of birds”) as well as domains we want to extend to but do not have data for (e.g. “paintings of birds”) can improve robustness. Using a multimodal model with a joint image and language embedding space, our method LADS learns a transformation of the image embeddings from the training domain to each unseen test domain, while preserving task-relevant information. Without using any images from the unseen test domain, we show that over the extended domain containing both training and unseen test domains, LADS outperforms standard fine-tuning and ensemble approaches over a suite of four benchmarks targeting domain adaptation and dataset bias.

Let the task be to classify Puffin vs. Sparrow. The training data $D_{\text{training}}$ contains photos of the two classes, but we would like to extend our classifier to paintings as well, that is, to $D_{\text{unseen}}$. We aim to do this using the text descriptions of the training and new domains, $t_{\text{training}}$ and $t_{\text{unseen}}$, respectively. The augmentation network $f_{\text{aug}}$ is trained to transform image embeddings from $D_{\text{training}}$ to $D_{\text{unseen}}$ using a domain alignment loss $\mathcal{L}_{\text{DA}}$ and a class consistency loss $\mathcal{L}_{\text{CC}}$. When $\mathcal{L}_{\text{DA}}$ is low, the augmented embeddings are in the new domain but may have drifted from their class. When $\mathcal{L}_{\text{CC}}$ is low, the augmented embeddings retain class information but may fail to reflect the desired change in domain. $f_{\text{aug}}$ aims to map every image embedding to a region where both losses are low, so that, for a photo $x$ of a Puffin, $f_{\text{aug}}(I(x))$ lies close to the image embedding of a painting of a Puffin. Note that the hallucinated image embeddings on the right are a pictorial representation of the effect of each loss function; they are not actually generated by LADS.
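To make the two losses more concrete, here is a minimal sketch of one plausible form for each; it is not the LADS implementation. It assumes that the domain alignment loss is a directional cosine loss, aligning the embedding shift `aug - img` with the text shift `T(t_unseen) - T(t_training)` (where `T` is an assumed text encoder), and that the class consistency loss is a cross-entropy over zero-shot class scores. The function names, the temperature, and the toy 3-D vectors are hypothetical stand-ins for real joint image-text embeddings.

```python
import math

def cosine(u, v):
    """Cosine similarity between two equal-length, non-zero vectors."""
    dot = sum(a * b for a, b in zip(u, v))
    return dot / (math.sqrt(sum(a * a for a in u)) * math.sqrt(sum(b * b for b in v)))

def sub(u, v):
    """Element-wise difference u - v."""
    return [a - b for a, b in zip(u, v)]

def domain_alignment_loss(img_emb, aug_emb, text_training, text_unseen):
    """Assumed directional form: 1 - cos(aug - img, T(t_unseen) - T(t_training))."""
    return 1.0 - cosine(sub(aug_emb, img_emb), sub(text_unseen, text_training))

def class_consistency_loss(aug_emb, class_text_embs, label, temperature=0.01):
    """Assumed form: cross-entropy of a zero-shot softmax over class text embeddings."""
    logits = [cosine(aug_emb, t) / temperature for t in class_text_embs]
    m = max(logits)  # subtract the max for a numerically stable log-sum-exp
    log_z = m + math.log(sum(math.exp(z - m) for z in logits))
    return log_z - logits[label]

# Hypothetical toy embeddings (not real model outputs).
text_training = [1.0, 0.0, 0.0]   # stands in for "a photo of a bird"
text_unseen = [1.0, 1.0, 0.0]     # stands in for "a painting of a bird"
classes = [[0.0, 0.2, 1.0],       # class 0: Puffin
           [0.0, -1.0, 0.2]]      # class 1: Sparrow
img = [0.0, 0.0, 1.0]             # embedding of a Puffin photo
aug = [0.0, 1.0, 1.0]             # shifted exactly along the text direction

da = domain_alignment_loss(img, aug, text_training, text_unseen)
cc_correct = class_consistency_loss(aug, classes, label=0)
cc_wrong = class_consistency_loss(aug, classes, label=1)
print(da, cc_correct, cc_wrong)
```

Here the shift from `img` to `aug` points exactly along the text shift, so the domain alignment loss is zero, and the augmented embedding still scores far higher for Puffin than for Sparrow. How the two terms are combined during training (for example, a weighted sum) is left open in this sketch.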

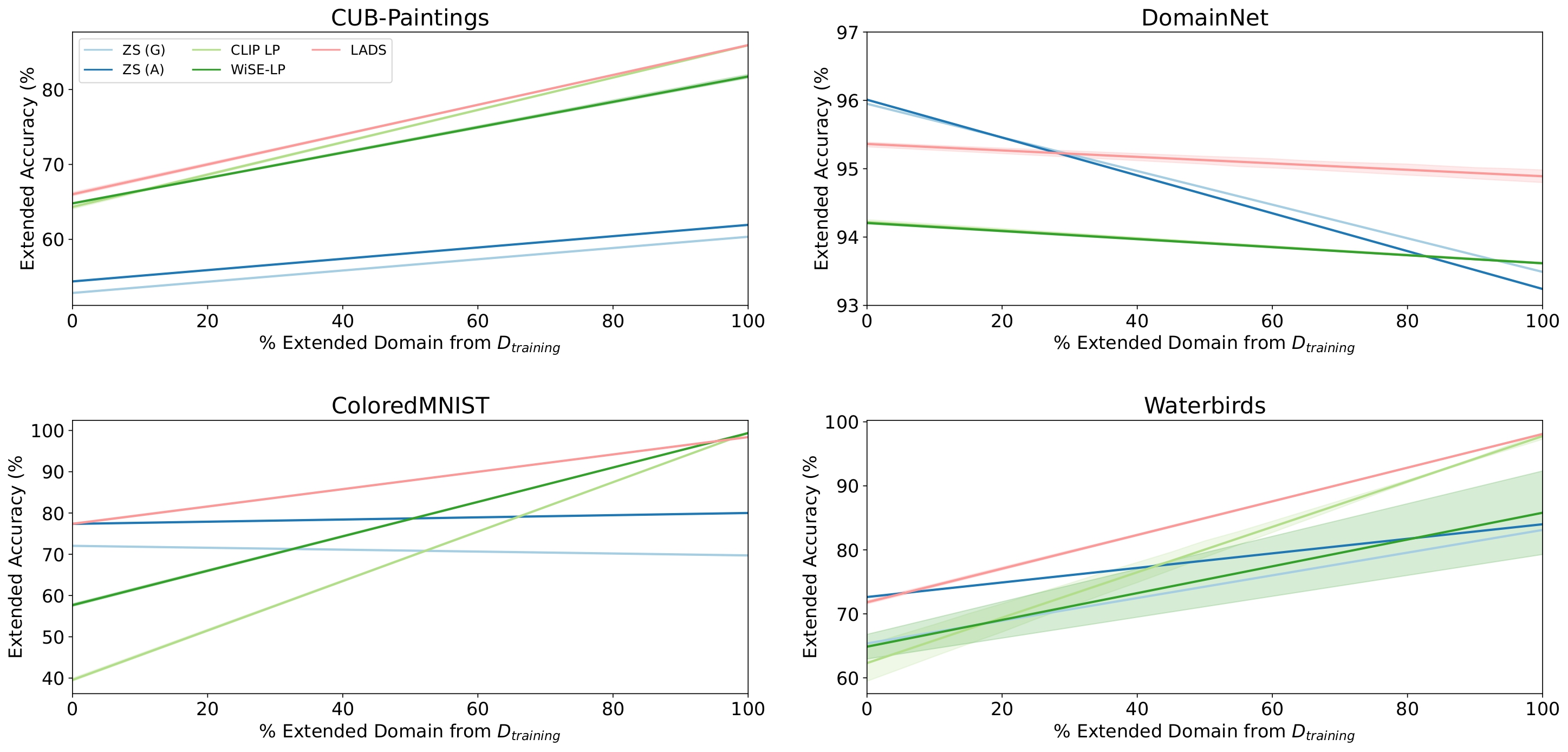

Results

Extended Domain Accuracy vs. Amount of In-Domain Test Data. For each dataset, we compute the extended domain accuracy as the average of the accuracies on $D_{\text{training}}$ and $D_{\text{unseen}}$, weighted by the proportion of test data drawn from each. As the proportion of the extended domain belonging to $D_{\text{training}}$ increases, the extended domain accuracy of fine-tuning methods and LADS becomes increasingly favorable relative to zero-shot.
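The weighted average can be written out directly. In this sketch, `frac_training` is the share of test data from the training domain, and the helper names are hypothetical. The accuracy numbers below are invented for illustration and are not results from the paper. The second helper solves for the proportion at which two methods reach equal extended accuracy, which is where curves like those in the figure would cross.

```python
def extended_accuracy(acc_training, acc_unseen, frac_training):
    """Average per-domain accuracies, weighted by each domain's share of the test data."""
    if not 0.0 <= frac_training <= 1.0:
        raise ValueError("frac_training must lie in [0, 1]")
    return frac_training * acc_training + (1.0 - frac_training) * acc_unseen

def crossover_fraction(a_training, a_unseen, b_training, b_unseen):
    """Share of training-domain data at which methods A and B tie.

    Solves p*a_t + (1-p)*a_u = p*b_t + (1-p)*b_u for p. Returns None when the
    two lines are parallel; a result outside [0, 1] means they never cross.
    """
    denom = (a_training - a_unseen) - (b_training - b_unseen)
    if denom == 0:
        return None
    return (b_unseen - a_unseen) / denom

# Invented numbers: a fine-tuned model that is strong in-domain but weaker on the
# unseen domain, versus a zero-shot model with equal accuracy on both domains.
ft = (0.95, 0.60)
zs = (0.75, 0.75)
for p in (0.0, 0.5, 1.0):
    print(p, round(extended_accuracy(*ft, p), 3), round(extended_accuracy(*zs, p), 3))
print(round(crossover_fraction(*ft, *zs), 3))
```

With these made-up numbers the fine-tuned model overtakes zero-shot once a bit more than 40% of the test data comes from the training domain.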

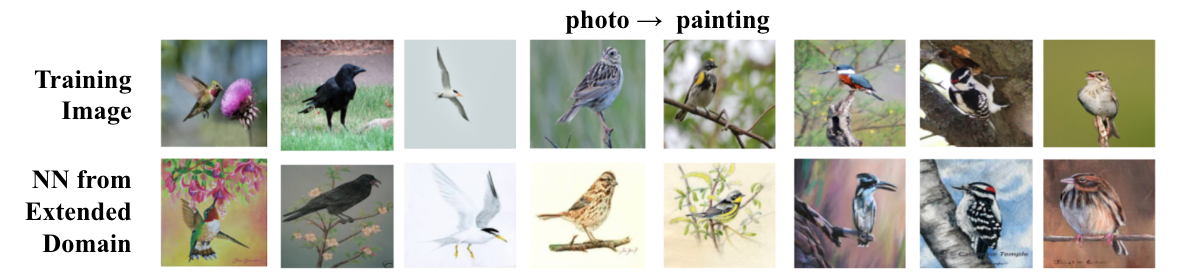

Assessing Augmentation Quality

Nearest Neighbors for LADS on CUB-Paintings. The top row shows training images, each labeled with the domain that its embedding is intended to be augmented to. The bottom row shows the nearest neighbor of each augmented embedding in the extended domain. LADS is able to augment photos to paintings while retaining class-specific information.
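A nearest-neighbor check of this kind can be sketched as a cosine-similarity lookup over embeddings of the extended domain. Using cosine similarity as the retrieval metric is an assumption here, since the caption does not state the metric. The bank, its metadata, and the vectors are hypothetical.

```python
import math

def normalize(v):
    """Scale a non-zero vector to unit length."""
    n = math.sqrt(sum(x * x for x in v))
    return [x / n for x in v]

def nearest_neighbor(query, bank):
    """Return the index of the bank embedding most cosine-similar to query."""
    q = normalize(query)
    best_idx, best_sim = -1, -math.inf
    for i, emb in enumerate(bank):
        sim = sum(a * b for a, b in zip(q, normalize(emb)))
        if sim > best_sim:
            best_idx, best_sim = i, sim
    return best_idx

# Hypothetical extended-domain bank: (embedding, domain, class) triples.
bank = [
    ([1.0, 0.0, 0.0], "photo", "Sparrow"),
    ([0.0, 1.0, 0.9], "painting", "Puffin"),
    ([0.0, -1.0, 1.0], "painting", "Sparrow"),
]
augmented = [0.0, 1.0, 1.0]  # toy augmented embedding of a Puffin photo
idx = nearest_neighbor(augmented, [emb for emb, _, _ in bank])
_, nn_domain, nn_class = bank[idx]
print(nn_domain, nn_class)
```

Reading off the neighbor's domain and class shows whether the augmentation reached the intended domain and kept its class, which is exactly what the figure inspects visually.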

Loss Function Ablation

Nearest Neighbors for LADS when ablating the loss on Waterbirds. The top row shows training images; the label above each image is the domain its embedding is intended to be augmented to, and the label below is the image's class. Each subsequent row corresponds to a choice of loss function(s) and shows the nearest neighbor of the augmented embedding in the test set. Images with red outlines were not augmented to the intended domain, and images with red labels were augmented to a different class. The domain alignment loss frequently augments the embedding into the intended domain, although it sometimes fails to retain class-specific information. The class consistency loss (both generic and domain-specific) retains class-specific information but often fails to augment the embedding to the intended domain.
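The two failure markings in this figure can be tallied per loss configuration. The sketch below mirrors them: a neighbor in the wrong domain corresponds to a red outline, and a neighbor of the wrong class corresponds to a red label. The `Neighbor` record, the outcome names, and the sample results are hypothetical. The Waterbirds domains and classes are abbreviated here as `land`/`water` and `landbird`/`waterbird`.

```python
from collections import Counter
from dataclasses import dataclass

@dataclass(frozen=True)
class Neighbor:
    """Metadata of a retrieved test-set image (hypothetical structure)."""
    domain: str
    label: str

def outcome(intended_domain, true_label, nb):
    """Classify one nearest neighbor by which markings it would receive."""
    wrong_domain = nb.domain != intended_domain  # red outline
    wrong_class = nb.label != true_label         # red label
    if wrong_domain and wrong_class:
        return "wrong_domain_and_class"
    if wrong_domain:
        return "wrong_domain"
    if wrong_class:
        return "wrong_class"
    return "ok"

def tally(results):
    """Count outcomes over (intended_domain, true_label, Neighbor) triples."""
    return Counter(outcome(d, y, nb) for d, y, nb in results)

# Hypothetical results for one loss configuration.
results = [
    ("water", "landbird", Neighbor("water", "landbird")),   # correct
    ("water", "landbird", Neighbor("land", "landbird")),    # wrong domain
    ("land", "waterbird", Neighbor("land", "landbird")),    # wrong class
    ("land", "waterbird", Neighbor("water", "landbird")),   # both wrong
]
counts = tally(results)
print(dict(counts))
```

Running the same tally for each ablated loss configuration gives a simple count behind the qualitative pattern described in the caption.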

BibTeX

If you find our work useful, please cite our paper:

@misc{https://doi.org/10.48550/arxiv.2210.09520,
  doi = {10.48550/ARXIV.2210.09520},
  url = {https://arxiv.org/abs/2210.09520},
  author = {Dunlap, Lisa and Mohri, Clara and Guillory, Devin and Zhang, Han and Darrell, Trevor and Gonzalez, Joseph E. and Raghunathan, Aditi and Rohrbach, Anja},
  keywords = {Computer Vision and Pattern Recognition (cs.CV), FOS: Computer and information sciences},
  title = {Using Language to Extend to Unseen Domains},
  publisher = {arXiv},
  year = {2022},
  copyright = {Creative Commons Attribution 4.0 International}
}